Let's face it: despite the kind words, the condolences, and even the armchair indignation, no one cares about the victims. Apart from the admirable people who dedicate themselves, with very limited means, to comforting, accompanying and, when possible, healing them, the rest of society considers victims a burden. Grief is getting shorter and shorter, and understanding for the effects of violence, abuse or severe exposure of privacy runs out almost as quickly. During the investigations into abuse in the Catholic Church, I read a member of the curia saying that yes, maybe the Church had not done as well as it could have, but that we had had enough of the victims, thirty years later, vomiting up their unpleasant memories when what they needed to do was get over it.

I doubt very much that a brain in development - and here someone with more knowledge than me should contribute - ever recovers from abuse, from terrible humiliation, from incomprehensible violence. I am convinced that such a brain is reprogrammed, that it is rewired by the trauma and that this person is different from who he or she could have become without this deep pain. If the rest of their life is marked by addiction, unhealthy perfectionism, difficulty trusting other people and a black hole of anguish that sucks out any shred of happiness, it is not only useless but painful to blame victims for a lack of discipline, for not knowing how to control their emotions, or for not having the courage to overcome it.

In the humanist current of technological development, Man is put at the centre. I often wonder whether this movement literally refers to men, and not to women or to the victims of technology, who in many cases are the same people. Big tech companies have recently incorporated this narrative as a selling point as thoroughly as they implement environmental protection measures: if, and only if, it does not harm their business in any way. It is one thing to spend a few extra dollars to make a show of it; it is quite another for the bottom line to suffer as a result of having a conscience.

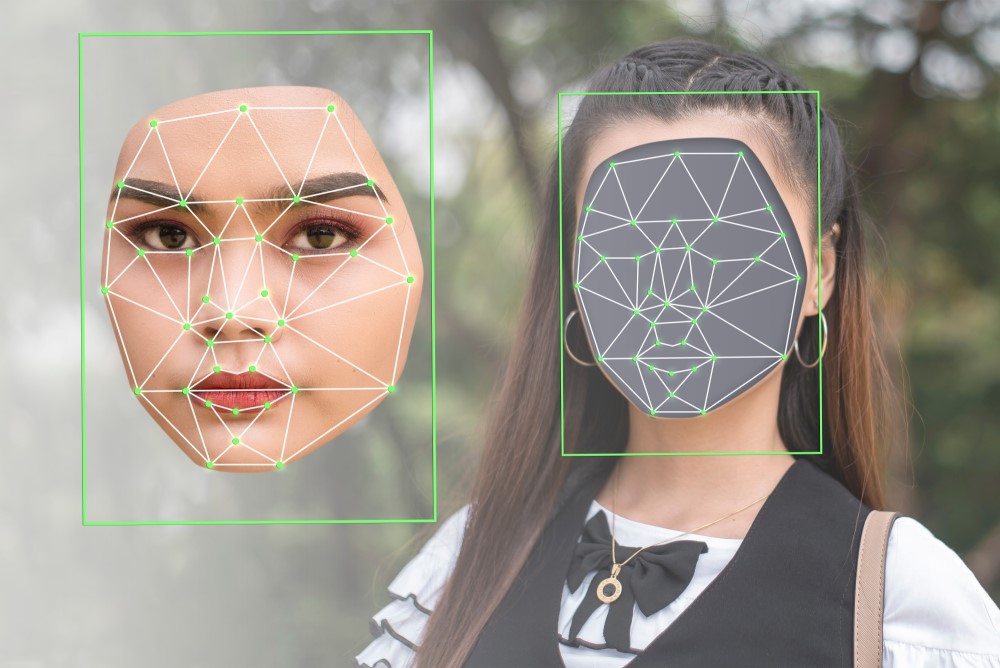

Foundational AI models, the ones that are like Swiss Army knives, which can hang a painting or cut a sausage, were not developed with Man at the centre. At one of the many conferences I attend to see if they can enlighten me, a scientist working on adversarial neural networks (the ones behind the generation of images and video) whose name I can't remember (this happens to me frequently and diminishes my ability to be considered an educated person) made it clear that they first develop technology to make it work - not to make it fair, ethical or auditable, or to prevent it from being used, by design and by default, for non-virtuous purposes. That's not their job, he responded when I questioned him, with a certain tone of "keep your grubby lawyer hands off my cool stuff."

Those AIs that, not long ago, were producing monsters straight out of bad nightmares became capable almost overnight, thanks to the tsunami effect of these technologies, of making versions of classic films and of perfectly imitating anyone's voice, even in languages the person being imitated does not speak. No one thought, or someone did and decided to ignore it, that these tools should not be consumer software available to any app developer able and willing to connect their application to the AI's API and use it as a powerful tool for whatever nonsense they could think of. Nobody thought that releasing the basic generative applications of AI for universal use would mean that many people would use them to do evil, to humiliate, annoy or insult. Their PR offices could always blame it on human nature and on users who insist on misusing technology made for love.

And, of course, no one thought about the minors in Almendralejo; no one thought their schoolmates and close friends would bring out the worst instincts of adolescence and - thanks to a tool that should not have been within their reach - would ruin their lives. And no, not in a child's game, but in an ancient hierarchical ritual of humiliation of women that seeks to lock us in the home, which we should never have left.

These minors are not the first, nor the only ones, of the many victims we will see in news items full of short-lived indignation, who will be told to go to court only to be inevitably revictimised. This is because there are no criminal offences that punish synthetic pornography, because we lack sufficient means to carry out forensic examinations of the phones of victims and of perpetrators, if the latter are ever identified, and because there are not enough staff to process all these cases with the speed they deserve. All of these cases (and I hope to be proven wrong) will end up shelved, while the aggressors have a good laugh and get away with it, a dangerous impunity that encourages repetition and even more abuse of the victims. And the victims will be told to get over it, that they shouldn't keep complaining about the same thing for the rest of their lives. Or at least that is what people will think.

All this leads me to say that it is best if there are no victims in the first place. And, believe it or not, that is perfectly feasible without structural changes. Less than a year ago, we were living without generative AIs, and no one can argue that using them is a matter of life and death. The law could limit these AI tools to professional and virtuous environments, to known developers, and to products whose purposes don't violate public order or privacy and aren't criminal; such measures would be more than enough. The girls from Almendralejo would have appreciated it.