Risks are often presented in a simplified manner, as if things could be defined as safe or unsafe, good or bad, which distorts their true complexity and makes it difficult to make informed decisions about them. In reality, we face complex problems for which there is no single right way to act. Moreover, we know that people can process complex information about risks, as long as it is explained in an understandable way.

In the first part of this series, we presented seven basic recommendations for reporting on the risks from scientific articles in a comprehensible way, particularly those referring to statistical estimates. Then, in the second guide, we explored six different meanings of "risk" in headlines.

This third guide highlights the essential points to ensure that risks are explained clearly while also reflecting their complexity. Depending on the story you are covering, some points may be more relevant than others.

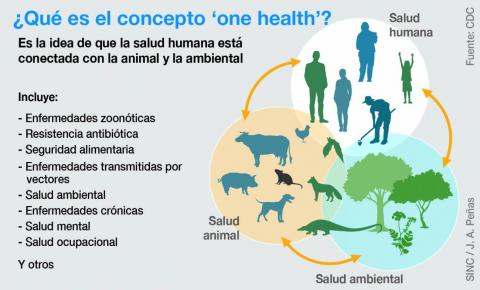

1. Clarify the scope of the risk

Imagine you are covering a story about water pollution in a rural community. First, identify the risk or outcome involved: is the concern adverse health effects, damage to agriculture, or both?

Clarify the factors that might contribute to the problem, such as the discharge of industrial waste into the river or the lack of wastewater treatment systems.

Additionally, specify who might be affected, for example, local residents or a broader community. It might also be necessary to clarify if certain age groups are particularly impacted, such as children or the elderly.

2. Decide if you need to include numbers

When covering a story about risks, consider whether it’s necessary to include numerical concepts, such as the following.

Relative and absolute risks

Relative risks only indicate how much larger or smaller one risk is than another; they do not provide information about the actual size of either risk. An absolute risk, on the other hand, is the probability of an event occurring in a given group over a given period of time.

- Example: Hormone replacement therapy increases the risk of developing breast cancer by about 30%. This is a relative risk; to assess it, we need to know the absolute risk in each group being compared. For instance, suppose 3 out of 50 women without hormone therapy develop breast cancer, compared with 4 out of 50 women taking it. The relative increase is roughly a third, but in absolute terms it amounts to 1 extra case for every 50 women.
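The arithmetic behind this example can be sketched in a few lines of Python. It simply recomputes the illustrative figures (3 and 4 cases out of 50); the function name `describe_risks` is hypothetical. With these numbers the relative increase works out to about 33%, while the absolute increase is 1 extra case per 50 women, or 2 percentage points.

```python
from fractions import Fraction


def describe_risks(cases_without, cases_with, group_size):
    """Compare two equally sized groups (hypothetical helper for illustration)."""
    # Absolute risk: the probability of the event within each group.
    risk_without = Fraction(cases_without, group_size)
    risk_with = Fraction(cases_with, group_size)
    # Relative change: how much bigger the second risk is than the first.
    relative_increase = (risk_with - risk_without) / risk_without
    # Absolute change: the difference between the two probabilities.
    absolute_increase = risk_with - risk_without
    return {
        "absolute_without": float(risk_without),
        "absolute_with": float(risk_with),
        "relative_increase_pct": round(float(relative_increase) * 100),
        "extra_cases_per_group": cases_with - cases_without,
        "absolute_increase_pct_points": round(float(absolute_increase) * 100, 1),
    }


# Illustrative figures from the example: 3 in 50 without therapy, 4 in 50 with it.
result = describe_risks(cases_without=3, cases_with=4, group_size=50)
print(result)
```

A headline built only on `relative_increase_pct` sounds alarming; reporting both absolute risks alongside it lets readers judge the size of the change for themselves.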

Expected frequencies and percentages

The presentation of numbers can influence the perception of risk. It is better to use expected frequencies rather than percentages: 1 in 1000, instead of 0.1%.

When making comparisons between groups, frequencies should have the same denominator: 1 in 50 versus 2 in 50 (not 1 in 50 versus 1 in 25).
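Both recommendations are simple conversions. A minimal sketch, with hypothetical helper names, that turns a percentage into a "1 in N" frequency and rewrites two frequencies over a shared denominator (the least common multiple of the two):

```python
from math import lcm  # available from Python 3.9


def as_one_in_n(percent):
    """Express a percentage as '1 in N', rounding N to a whole number."""
    return f"1 in {round(100 / percent)}"


def common_denominator(freq_a, freq_b):
    """Rewrite two (events, total) frequencies so they share the same total."""
    (events_a, total_a), (events_b, total_b) = freq_a, freq_b
    shared = lcm(total_a, total_b)
    return (events_a * shared // total_a, shared), (events_b * shared // total_b, shared)


print(as_one_in_n(0.1))                       # 1 in 1000
(a, n), (b, _) = common_denominator((1, 50), (1, 25))
print(f"{a} in {n} versus {b} in {n}")        # 1 in 50 versus 2 in 50
```

The "1 in N" helper is only meant for rare events: for a larger figure such as 40%, "4 in 10" reads far better than "1 in 2.5".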

Denominator neglect

Denominator neglect occurs when we focus on the number of events that have occurred (the numerator) without considering the total number of opportunities for those events to occur (the denominator).

- E.g.: 8 motorcycle accidents a day compared with 100 car accidents a day does not mean that motorcycles are safer; we need to know how many people ride motorcycles and how many drive cars.
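Putting the denominator back in means converting counts into rates. The exposure figures below (how many people ride motorcycles and drive cars each day) are invented purely for illustration; with them, the motorcycle rate turns out four times higher than the car rate, even though the raw count is much lower.

```python
def rate_per_100k(events, people_exposed):
    """Events per 100,000 people exposed (multiply first to keep the arithmetic exact)."""
    return events * 100_000 / people_exposed


# Accident counts from the example; exposure numbers are HYPOTHETICAL.
motorcycle_accidents, motorcyclists = 8, 20_000
car_accidents, car_drivers = 100, 1_000_000

moto_rate = rate_per_100k(motorcycle_accidents, motorcyclists)
car_rate = rate_per_100k(car_accidents, car_drivers)
print(f"Motorcycles: {moto_rate:.0f} accidents per 100,000 riders per day")
print(f"Cars: {car_rate:.0f} accidents per 100,000 drivers per day")
print(f"Motorcycle rate is {moto_rate / car_rate:.0f} times the car rate")
```

In a real story the most meaningful denominator might instead be distance travelled or hours on the road; choosing it is part of the reporting.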

Averages and recurrence intervals

The recurrence interval is the average time between occurrences of an event of a given size, as in a "100-year flood". Averages and recurrence intervals are not sufficient to reflect what actually happens, as they can hide real variation, such as infrequent but extreme events. For example, even if the average precipitation in a region is within expected levels, the average alone could hide the fact that some areas are experiencing droughts while others are facing floods.

- E.g.: an average global temperature increase of 2°C does not describe the extremes. It is important to explain that this average is linked to an increase in the frequency and severity of extreme temperatures.
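Recurrence intervals are easy to misread: a "100-year flood" is not an event that arrives once a century on schedule. A short sketch, assuming each year is independent and the annual probability (1 divided by the recurrence interval) stays constant, shows how likely such an event is over a longer period. The constant-probability assumption is a simplification; climate change, for example, can shift these probabilities over time.

```python
def prob_at_least_one(recurrence_years, horizon_years):
    """Chance of at least one event in `horizon_years`, ASSUMING independent years
    and a constant annual probability of 1 / recurrence_years."""
    annual_probability = 1 / recurrence_years
    return 1 - (1 - annual_probability) ** horizon_years


for horizon in (1, 30, 100):
    p = prob_at_least_one(100, horizon)
    print(f"'100-year' event within {horizon} years: {p:.0%}")
```

Under these assumptions, a "once-in-a-century" flood has roughly a one-in-four chance (about 26%) of happening at least once over 30 years, and even over a full century it is not guaranteed.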

Single event probabilities

The probability of a single event tells us how likely it is to happen on a specific occasion; it does not tell us how long it will last, how severe it will be, or how often it has happened before.

- E.g.: A 40% chance of flooding does not mean that 40% of the area will flood, nor that it will rain for 40% of the day. It means that flooding will occur after about 4 out of every 10 warnings like this.
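The "4 out of every 10 warnings" interpretation can be checked against past forecasts, which is how forecasters assess calibration. A minimal sketch, using an invented record of ten 40% flood warnings (hypothetical data), groups warnings by their stated probability and counts how often flooding followed:

```python
from collections import defaultdict


def observed_rate_by_forecast(records):
    """Map each stated probability to the share of warnings followed by the event."""
    tally = defaultdict(lambda: [0, 0])  # forecast -> [events, warnings]
    for forecast, event_happened in records:
        tally[forecast][0] += int(event_happened)
        tally[forecast][1] += 1
    return {forecast: events / warnings for forecast, (events, warnings) in tally.items()}


# HYPOTHETICAL record: ten warnings that each said "40% chance of flooding".
records = [(0.4, True)] * 4 + [(0.4, False)] * 6
print(observed_rate_by_forecast(records))  # {0.4: 0.4}
```

If flooding had followed, say, 8 of those 10 warnings instead, the 40% figure would be understating the risk. In practice, many more warnings are needed before such a comparison means much.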

Conditional probabilities

A conditional probability is the probability that an event will occur given that another event has already occurred. Some of the most familiar conditional probabilities are the rates of false positives and false negatives in laboratory tests or alarm systems: an alert does not necessarily mean a real threat, and a positive result is not necessarily a true positive.

- E.g.: In an antibody test that assesses whether people have immunity to a virus, the probability that a person with a positive result actually has immunity (i.e., is a true positive) depends on 1) the prevalence in the population, that is, the probability that a person has had the virus, and 2) the accuracy of the test: how often it correctly detects people who have antibodies, and how often it correctly gives a negative result to people who do not. The lower the prevalence, the larger the share of positive results that are false positives, even with a good test. This is because, when few people have had the virus, people without antibodies vastly outnumber those with them, so even a small error rate in that large group can produce as many false positives as there are true positives, or more. This infographic developed by the Winton Centre explains an example in detail.
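The effect of prevalence can be shown with natural frequencies, by imagining 10,000 people tested. In this sketch the test's sensitivity (share of people with antibodies who test positive) and specificity (share of people without antibodies who test negative) are set to 90% and 95%; these values, like the two prevalence levels compared, are hypothetical and chosen only for illustration.

```python
def positive_results(population, prevalence, sensitivity, specificity):
    """Return (true positives, false positives, share of positives that are true)."""
    with_antibodies = population * prevalence
    without_antibodies = population - with_antibodies
    true_positives = with_antibodies * sensitivity
    false_positives = without_antibodies * (1 - specificity)
    share_true = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, share_true


# HYPOTHETICAL test accuracy, identical in both scenarios.
for prevalence in (0.20, 0.01):
    tp, fp, share = positive_results(10_000, prevalence, sensitivity=0.90, specificity=0.95)
    print(f"Prevalence {prevalence:.0%}: {tp:.0f} true and {fp:.0f} false positives; "
          f"{share:.0%} of positive results are correct")
```

The test is equally accurate in both scenarios; only the prevalence changes. Yet at 1% prevalence, most positive results (495 of 585) are false. This is Bayes' theorem expressed with counts rather than formulas.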

3. Explore what people want to know or understand

Identifying what people need to know will help you choose the key information.

Examples:

- In a community with a river contaminated by lead, people would want to know: What factors are contributing to the river's contamination? What are the health consequences of drinking water contaminated with lead? How is it determined whether the water is contaminated?

- Regarding the use of artificial intelligence in classrooms, people would want to know: What are the effects of AI on schoolwork, mental health, inequalities, or other aspects? How much can we trust the accuracy of the information provided by AI?

- In a coastal community experiencing an increase in the frequency and intensity of storms, people would want to know: What are the causes? What are the potential consequences for their properties? When should they evacuate?

4. Identify the emotions linked to risk: what are people feeling and why?

Risk is often associated with emotions that can influence decision-making. Acknowledging these emotions helps to reveal the perspective of those facing the risk and provides context to the story. Additionally, identifying these emotions could help you prioritize the information to cover.

Examples:

- Many people refused the COVID-19 vaccine due to fear of adverse effects. This highlights the need to explain the magnitude of the potential benefits versus the potential harms of the vaccine.

- A community may be angry about the construction of a nuclear plant in their area. This emphasizes the need to explain who decided to build the plant and how: Was it the government, private companies, or the residents?

5. Assess whether there is a decision to be made and what the options are

Often, people need to understand a risk in order to make a decision about it, and the goal is to help them make that decision as informed as possible.

Examples:

- Landowners need to decide whether to lease their land for the installation of solar panels, despite potential damage to agriculture.

- In 2021, several countries needed to decide whether to continue administering the AstraZeneca COVID-19 vaccine after it was associated with an increased risk of blood clots.

- Residents of a community need to decide whether to evacuate or stay in their homes in the face of a high-risk alert for an impending volcanic eruption.

Frequently, when people do not take action in response to a risk, it is not because they do not understand the risk, but because they lack alternative actions or have chosen not to act after considering all factors.

6. Present balanced information about the benefits, harms and costs that need to be weighed up

When the audience needs to evaluate risks to make decisions, it’s important to explain the potential benefits, harms and costs of each option, showing the complexity of scenarios where there is rarely a clear-cut or single correct answer. Most of the time, one must weigh the pros and cons.

Additionally, it's crucial to clarify the perspective from which this judgement is made: Is it from the local government, the private sector, the community, a specific country, or another entity? The perception of what is good or bad can change depending on the perspective.

Examples:

- Evacuating an area in response to a volcanic alert offers the benefit of protecting lives, but it comes at the cost of leaving homes behind, potentially exposed to theft, and having to find somewhere else to stay. Not evacuating helps protect property but puts personal safety, and even lives, at risk.

- Conventional agriculture supports food security but may generate lower profits. Leasing land for solar panels, on the other hand, yields more income but reduces food production. A third alternative might involve integrating solar panels with crops in ways that offer additional benefits, such as protection against heatwaves and frost.

When the probabilities of benefits and harms are known, they should be presented using numbers.

- For every 100,000 people aged 59-69 vaccinated with the AstraZeneca vaccine, 10 avoid hospitalization, while 0.4 develop blood clots associated with the vaccine. This is a simplified example of the explanation provided by the Winton Centre, detailed further here.
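Figures such as "0.4 develop blood clots" are hard to picture, because nobody is 0.4 of a person. A small sketch that rescales the two figures quoted above to a larger, shared denominator and compares them; it uses only the numbers in the text, and the simple ratio ignores differences in how severe each outcome is. The helper name `rescale` is hypothetical.

```python
def rescale(count, per, new_per):
    """Re-express 'count per `per` people' as a count per `new_per` people."""
    return count * new_per / per


# Figures quoted in the text, both per 100,000 people aged 59-69 vaccinated.
hospitalizations_avoided = 10
blood_clots = 0.4

print(f"Per million vaccinated: {rescale(hospitalizations_avoided, 100_000, 1_000_000):.0f} "
      f"hospitalizations avoided, {rescale(blood_clots, 100_000, 1_000_000):.0f} blood clots")
print(f"About {hospitalizations_avoided / blood_clots:.0f} hospitalizations avoided per blood clot")
```

Expressed per million, the comparison becomes 100 hospitalizations avoided versus 4 blood clots, two whole numbers over the same denominator.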

It is necessary to emphasize who is making the judgement for a decision. For some countries, the best decision was to suspend the AstraZeneca vaccine because they had other options available. For others, this was not the best decision, as the lack of alternative vaccines meant that the harm from discontinuing it would be much greater than the potential risks associated with vaccination.

7. Anticipate misconceptions about risk and address them

The information we access may be based on low-quality evidence, or may simply be false. In addition, we all have cognitive biases: our thinking can be skewed when we rely too heavily on past experiences or feelings instead of viewing things objectively. Anticipating these misconceptions and biases will help you explain the issue more effectively.

Examples:

- A community may not be concerned about severe flooding because they have never experienced it. This is an example of optimistic bias. It is important to explain that past safety does not guarantee future safety.

- In Mexico, major earthquakes struck on September 19 in 1985 and 2017 (and again in 2022), leading some people to believe that earthquakes are more likely in September. This reflects the tendency to perceive patterns in randomness. It is important to explain that, although our minds may identify patterns, the timing of earthquakes is not linked to the calendar, and coincidences like this can occur by chance.
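Coincidences like this are more likely than intuition suggests. The classic "birthday problem" calculation below estimates the chance that at least two of several events land on the same calendar date, assuming each date is independent and equally likely (a simplification that ignores leap years). The numbers of events are hypothetical and do not describe Mexico's actual earthquake record.

```python
from math import prod


def prob_shared_date(n_events, days=365):
    """Chance that at least two of n random, independent dates coincide."""
    if n_events > days:
        return 1.0  # more events than dates: a repeat is certain
    prob_all_different = prod((days - i) / days for i in range(n_events))
    return 1 - prob_all_different


# HYPOTHETICAL counts of notable earthquakes over some period.
for n in (10, 23, 40):
    print(f"{n} events: {prob_shared_date(n):.0%} chance that two share a calendar date")
print(f"5 events: {prob_shared_date(5, days=12):.0%} chance that two share a month")
```

With only 23 random events, a shared date is already more likely than not, so a repeated date on its own is not evidence of a pattern.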

8. Explain the uncertainties

All risks involve uncertainties. It was once believed that discussing uncertainty could drastically reduce trust in information. However, studies now show this is not the case, so there is no excuse for omitting uncertainty when explaining risks.

When communicating quantitative risks, that is, probabilities:

- Explain the uncertainty about the precision of the numbers. Present a rounded number followed by the range within which that number could vary. E.g.: 13 cases are expected (10–20) per 100 people.

- Explain the uncertainty about the quality of the evidence supporting that number. Particularly, indicate when the quality of the evidence is low, as people might otherwise place too much weight on it in their decision-making.
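The recommended format, a rounded estimate followed by its range, is easy to produce consistently. A minimal sketch, with a hypothetical helper name and invented inputs (a probability of 0.1327 with an uncertainty interval of 0.098 to 0.203), that reproduces the sentence used in the example above:

```python
def describe_estimate(point, low, high, per=100, unit="cases"):
    """Turn a probability and its uncertainty interval into an expected-frequency sentence."""
    def to_count(probability):
        # Round to whole cases to avoid a false impression of precision.
        return round(probability * per)

    return (f"{to_count(point)} {unit} are expected "
            f"({to_count(low)}–{to_count(high)}) per {per} people")


# HYPOTHETICAL estimate and interval, e.g. as reported in a study's results.
print(describe_estimate(0.1327, 0.098, 0.203))
```

The interval itself should always come from the study being reported; the helper only rounds and formats it.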

To explain uncertainties beyond the numbers, related to unknown factors:

- Say what is known, what is not known, and what the current recommendation is.

- When uncertainty is high, clarify that the evidence could change over time, and therefore, decisions may also need to change.

(This strategy was used by Lord Krebs, head of the UK Food Standards Agency, during several crises in the 2000s.)

Examples:

- When a Category 5 hurricane is forecast, the storm might end up weaker than predicted, or its impacts might exceed expectations. It’s important to note that although estimates of environmental events can be based on past occurrences, they remain uncertain because of the many changing variables involved.

- The integration of artificial intelligence could lead to consequences that we cannot yet anticipate due to a lack of data and experience. There is uncertainty because there is still too much unknown. In such cases, it should be clarified that the evidence on risks may evolve over time, and recommendations might also change.

- A study finds that drinking alcohol, even in small amounts, increases the probability of developing several diseases. The estimated increase is not a fixed value but comes with a range, which should be presented.

The information in this article is based on the Risk know-how framework. Here you will find more detailed explanations of all the concepts covered, along with definitions, examples, and resources to help you understand and communicate risks.

The platform includes a global library of resources that you can explore by topic or sector to find videos, infographics and other materials developed by various organizations to improve risk understanding. For example, RealRisk helps you extract numbers from scientific articles to transform relative risks into absolute ones, accompanied by charts and clear sentences.

The framework was developed by academics and individuals from communities facing risks in various sectors: health, environment, safety at work, safety at sea, fishing, technology, and more. It was created as part of the Risk know-how initiative, a collaboration between Sense about Science in the UK and the Institute for the Public Understanding of Risk (IPUR) in Singapore, and funded by the Lloyd's Register Foundation.

We welcome feedback from journalists who use this guide, to help us keep improving the quality of risk journalism. Please share your experience with the guide, along with any questions or comments, at: maricarmen@senseaboutscience.org.

This article has been reviewed by Leonor Sierra.
