Hybrid visual detection system proposed for autonomous vehicles

Combining two types of cameras could enable autonomous vehicles to detect objects both accurately and efficiently, according to researchers in Switzerland in a study published in Nature. Autonomous vehicles need visual systems that can detect fast-moving objects without sacrificing image quality. The system combines a colour camera running at a reduced frame rate, which lowers the bandwidth needed to process images, with an event camera, which picks up fast-moving objects such as pedestrians or vehicles and compensates for the higher latency of the colour camera.

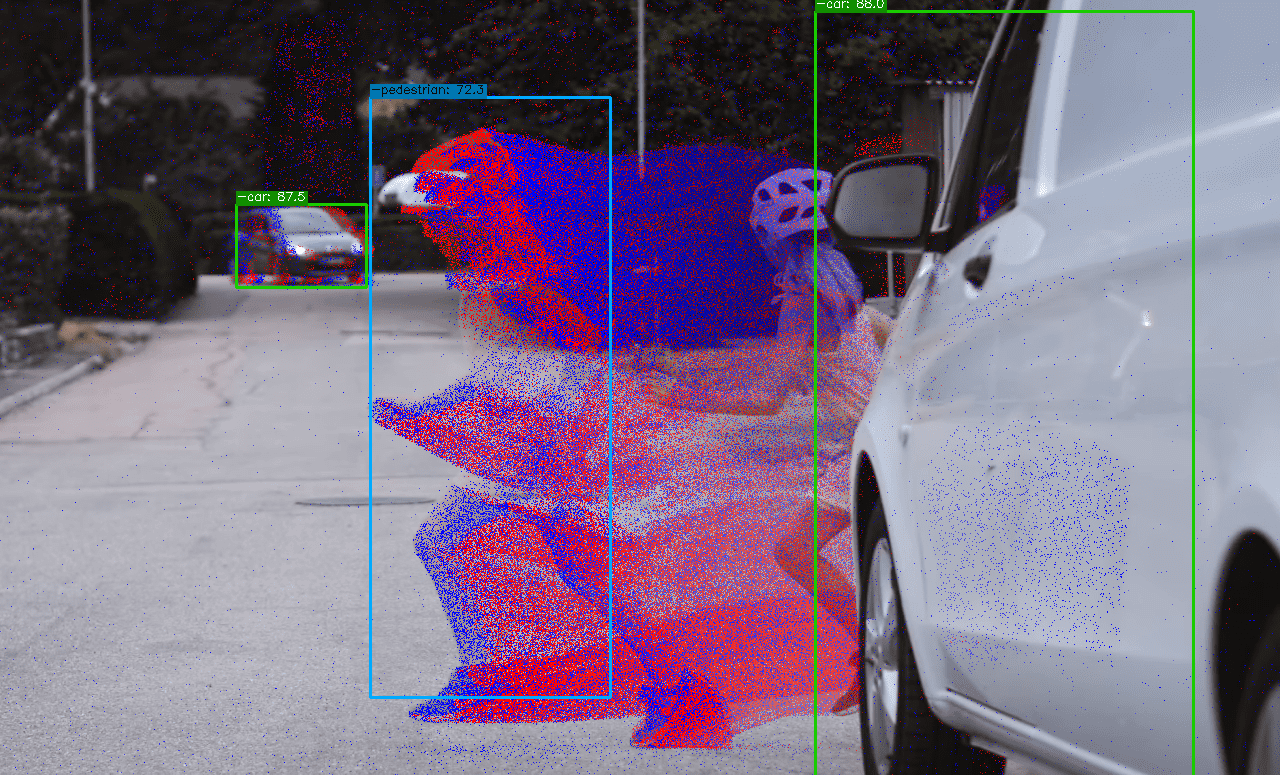

The image shows both the colour information from the colour camera and the events (blue and red dots) from the event camera generated by a running pedestrian. Credit: Robotics and Perception Group, University of Zurich, Switzerland.
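As a rough illustration of why a moving pedestrian produces a cloud of events while the static background produces none, the sketch below approximates an event camera by comparing two brightness frames. It is a simplification under stated assumptions, not the authors' method: a real event camera reports changes asynchronously, pixel by pixel and with timestamps, rather than by differencing frames. The function name `events_from_frames` and the `threshold` and `eps` values are hypothetical choices made for this example.

```python
import math

def events_from_frames(prev, curr, threshold=0.2, eps=1e-3):
    """Approximate event generation from two brightness frames.

    Emits (x, y, polarity) wherever the log-brightness change at a pixel
    reaches the threshold: +1 for brighter, -1 for darker.
    The threshold and eps values are assumptions for illustration only.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            change = math.log(c + eps) - math.log(p + eps)
            if abs(change) >= threshold:
                events.append((x, y, 1 if change > 0 else -1))
    return events

# Toy scene: a bright 2x2 patch moves one pixel to the right
# over a dark, static background (4 rows, 6 columns).
prev = [[0.1] * 6 for _ in range(4)]
curr = [[0.1] * 6 for _ in range(4)]
for y in (1, 2):
    prev[y][1] = prev[y][2] = 1.0
    curr[y][2] = curr[y][3] = 1.0

events = events_from_frames(prev, curr)
```

In this toy scene only the leading and trailing edges of the moving patch generate events, with opposite polarities. The unchanged background and the patch interior stay silent, which is what keeps the event stream sparse.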

Angélica Reyes

Lecturer in the Department of Computer Architecture at the Polytechnic University of Catalonia and researcher in the Intelligent Communications and Avionics for Robust Unmanned Aerial Systems group

The development of intelligent transport systems aims to provide real-time traffic information services to all road users, from autonomous vehicles and pedestrians to the most vulnerable users, such as people with disabilities. In an ecosystem where vehicles will communicate with each other and with different road infrastructures, it is crucial that vehicles can analyse and process the information they receive in order to make decisions. However, factors such as high vehicle speeds, constant mobility and varying traffic density pose major challenges.

The paper by Daniel Gehrig and Davide Scaramuzza presents an interesting advance in automotive vision: it combines event cameras with RGB cameras to enhance real-time perception with high resolution and low latency, which is crucial for fast and accurate object detection at high speeds and under abrupt changes in illumination. The authors use a convolutional neural network (CNN) to process the images and an asynchronous graph neural network (GNN) to process the events.

Daniel Gehrig & Davide Scaramuzza.

- Research article

- Peer reviewed