Reaction: An artificial intelligence method shows human-like generalization ability

In a study published in the journal Nature, two researchers from New York University (USA) and Pompeu Fabra University in Barcelona claim to have demonstrated that an artificial neural network can achieve systematic generalization similar to that of humans, that is, it can learn new concepts and combine them with existing ones. This claim challenges the 35-year-old idea that neural networks are not viable models of the human mind.


Teodoro Calonge

Professor of the Department of Computer Science at the University of Valladolid

I find the article ingenious in the way it uses artificial neural networks (ANNs) to try to explain how they themselves work, hence the prefix 'meta' [in the title of the article]. At first glance, this is what the abstract offers. However, what the article actually does is compare the predictive ability of generative neural networks (GANs) with that of humans. To do this, the authors assemble a battery of psychology-based tests; that is, they compare the responses of GANs with those obtained in equivalent psychological studies of human intelligence.

I have had a chance to look at the code with which they implemented the GANs, which is written in PyTorch. From a technical point of view, it seems flawless to me.

However, GANs have raised great expectations, which should be tempered to avoid the 'soufflé effect'. ANNs, in general, are based on learning, usually from large amounts of raw data: a huge volume of numerical information is needed to provide a given piece of knowledge. This explains why GANs such as ChatGPT have emerged only now. These models were already known at a theoretical level, but they had to wait for recent advances in hardware and software, in particular Transformers (2018), before they could be run in a reasonable time through parallel processing.

Returning to the central theme of the article, I think it is worth clarifying that this is a first work. I couldn't say whether it is a line of research that will offer great advances in the short or medium term. Certainly, I don't think it will answer the questions currently being raised in the field of 'explainability of artificial intelligence' and, in particular, in artificial intelligence applied to medicine, where distrust is the main issue.

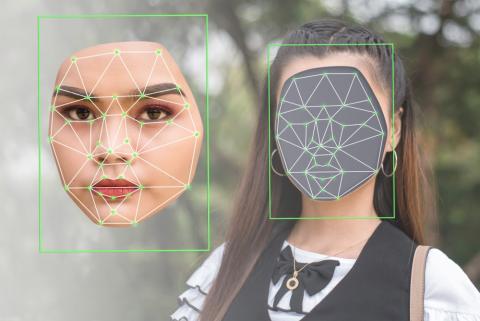

In general, health professionals distrust this 'black box' (the ANN), which gives very good results but tells them little about what those results are based on. Advances in 'explainability' are beginning to yield small results that are proving useful in certain areas of biomedical research. For example, ANN-based medical image classification systems are being complemented with algorithms that analyze how the ANNs internally represent those images. Thus, the areas of a chest X-ray that most strongly activate an ANN are being delimited in order to detect a particular type of pneumonia. This information can complement what a pulmonologist already knows and even help verify whether areas not previously taken into account, but which do count for an ANN, really are medically relevant to diagnosing the disease.
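As a rough illustration of how the regions that matter to a classifier can be delimited, the following is a minimal sketch of occlusion sensitivity, one common explainability technique. It is not the method of Lake and Baroni, nor that of any particular medical system. The function `toy_score` is a hypothetical stand-in for a trained ANN, and the 6x6 grid of ones is a made-up "image"; both are assumptions chosen only to keep the example dependency-free.

```python
# Occlusion sensitivity on a toy image, in plain Python (no dependencies).
# Idea: blank out one patch at a time and record how much the score drops.
# A large drop means the classifier relied on that patch.

def toy_score(image):
    """Hypothetical classifier: mean intensity of rows 2-3, columns 2-3."""
    region = [image[r][c] for r in range(2, 4) for c in range(2, 4)]
    return sum(region) / len(region)

def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Return a grid of score drops, one entry per non-overlapping patch."""
    base = score_fn(image)
    rows, cols = len(image), len(image[0])
    heat = []
    for r0 in range(0, rows, patch):
        heat_row = []
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy; leave the input untouched
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            heat_row.append(base - score_fn(occluded))
        heat.append(heat_row)
    return heat

image = [[1.0] * 6 for _ in range(6)]  # made-up 6x6 "X-ray"
heat = occlusion_map(image, toy_score)
for row in heat:
    print(row)
```

In this toy setup only the central patch changes the score, so it is the only non-zero entry in the resulting map. With a real model, `score_fn` would wrap the network's predicted probability for the class of interest, and the map would be overlaid on the image for a clinician to inspect.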

Lake and Baroni.

- Research article

- Peer reviewed