The emergence of Artificial Intelligence (AI) is revolutionising science and the economy at an unprecedented speed. ChatGPT, the most visible example, has become the fastest-adopted technology in human history. The recent Nobel Prizes in Physics and Chemistry, awarded for crucial breakthroughs in neural networks and their application to protein structure prediction, are irrefutable proof of its impact. However, as with all new technologies, discussions arise about potential dangers and the need for regulation. Among the many aspects to consider, this dizzying progress raises a dilemma about how accessible these systems should be and the potential problems associated with each level of accessibility.

The accessibility of language models (large language models, foundation models) covers both the data used for training and their parameters (the weights, which are the neural network equivalent of source code in software). There are completely open models (such as those from EleutherAI), others with open weights (such as Meta's Llama), some that are open but with restrictions on use (Hugging Face's BLOOM), and finally closed models, accessible only through servers (such as OpenAI's). This disparity generates a debate that weighs the potential vulnerability of open systems to malicious attacks and misuse of data or models against their obvious benefits in fostering scientific and technical collaboration, innovation and verification.

In a paper just published in Science, ten researchers discuss how fear of these potential risks can lead to over-regulation of open models, inhibiting scientific development and favouring the large corporations that own closed models, which are easier to control but also harder to verify and regulate. As the authors point out, ‘different regulatory policy proposals can disproportionately affect the innovation ecosystem’. Experience with open source software, such as Linux, demonstrates the potential of open development for economic growth and technological independence.

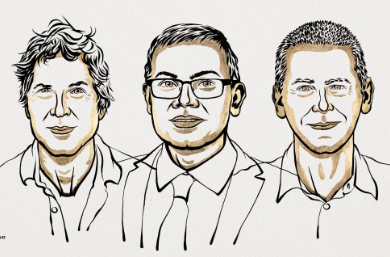

The case of AlphaFold - a protein structure prediction system - exemplifies this dilemma. AlphaFold 2, which is open access, revolutionised biology and is the direct subject of the recent Nobel Prize in Chemistry. However, its successor - AlphaFold 3, with capabilities in the field of drug design - remains closed and is accessible only under restrictive conditions via a server that does not guarantee data confidentiality. This decision by Google DeepMind, which employs two of the prize winners, Demis Hassabis and John Jumper, contrasts with the open approach of the other prize winner, the researcher David Baker of the University of Washington, and has generated enormous unease in the scientific community.

The publication of the AlphaFold 3 results in Nature on 8 May 2024, without even the reviewers of the publication having access to the system to verify the published results, contradicts the basic principles of scientific publication, and has been denounced. Our research community expressed its disappointment in an open letter to the editors of Nature, to which more than 1,000 scientists subscribed: ‘Although AlphaFold3 extends the capabilities of AlphaFold2 (...), it was published without the necessary means to test and use the software efficiently (...). This is not in line with the principles of scientific progress, which are based on the ability to evaluate, use and develop existing work. The high-level publication advertises capabilities that remain hidden behind the doors of the parent company’.

The justification later given by Nature in an editorial, based on bioterrorism concerns, is unconvincing when we know that the scientific community will reproduce the results within a few months. This unfortunate situation seems to open a new path in which large corporations are willing to subvert the scientific publishing system to suit their needs, as further actions by Google DeepMind seem to indicate.

In conclusion, the current wave of artificial intelligence is a disruptive phenomenon. Its enormous transformative potential raises a legitimate social concern that may end up being translated into regulations that limit the development of the open models necessary for research, technological independence and economic development, such as those developed by the Barcelona Supercomputing Center (National Supercomputing Centre), and that favour closed models controlled by large American corporations.