Reactions: EU institutions agree on artificial intelligence law

After lengthy negotiations, the European Commission, the European Parliament and the Council of the EU - which represents the member states - reached a provisional agreement last night on the content of the 'AI Act', the future law that will regulate the development of artificial intelligence in Europe and the first of its kind in the world. The agreement limits the use of biometric identification systems by security forces, includes rules for generative AI models such as ChatGPT and provides for fines of up to 35 million euros for those who violate the rules, among other measures. The text must now be formally adopted by the Parliament and Council before it becomes EU law.

Alfonso Valencia

ICREA professor and director of Life Sciences at the Barcelona National Supercomputing Centre (BSC).

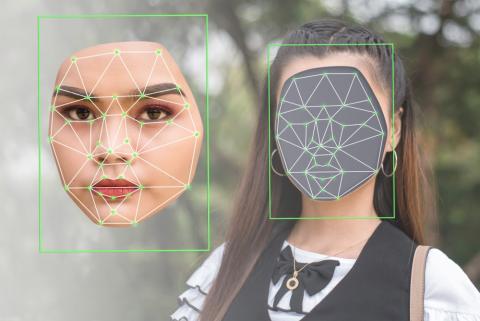

The agreed legislative proposal mainly affects two types of applications: human recognition applications (facial recognition, biometrics, etc.), which are banned with exceptions, and so-called 'high-risk' applications, such as general models (the popular ChatGPT, among others).

With regard to applications of biometric recognition techniques, exceptions are made for law enforcement purposes, making it difficult to know where the use of these technologies will end up in practice.

Regarding the second category, large general models (foundational models) that, trained on large amounts of text, reproduce increasingly advanced features of practical utility (from ChatGPT to GPT-4), the text proposes restrictive measures that require risk assessment, a detailed description of how the models operate and a description of all the data sources used for their training. These measures are relatively easy to apply to traditional systems such as those operating in banks or insurance companies, but very difficult or impossible to apply to new AI systems.

The extension of these measures will mean that the current systems of large companies will not be able to operate in Europe, except through IP addresses from outside Europe (a booming business). In this context it will be very difficult for Europe, where research groups, SMEs and companies much smaller than those in the US operate, to develop competitive systems. Aware of the damage that these measures could cause both to the companies that develop systems and to those that use them, the text itself speaks of environments in which companies can develop these "secure" systems, which it leaves in the hands of governments. Given that there is neither the initiative, nor the budget, nor the unity of action, nor the technology to create such environments, it seems that the implementation of the proposed measures will definitively leave Europe out of the development of major AI models.

Not the least of the issues contained in this proposal is the repeated insistence that models adhere to intellectual property laws. Training foundational models requires massive amounts of data, equivalent to basically the entire content of the internet. In this huge amount of data it is impossible to automatically detect the copyright status of each text, let alone pay for the use of each one. This limitation alone is enough to put an end to the development of these large models in Europe. An alternative exists and is reasonable, if we consider that the models are no more than statistical systems that do not reproduce the specific texts, but only generate aggregate statistical characteristics of all the information. Therefore, it would seem reasonable to look for general measures to compensate for the use of information instead of massively applying copyright laws.

In short, Europe is very limited in the use and development of large models, without offering technically and economically viable alternatives.

Paloma Llaneza

Lawyer, systems auditor, security consultant, expert in the legal and regulatory aspects of the internet and CEO of Razona Legaltech, a technology consultancy firm specialising in digital identity

What are the most important changes that could affect business and research?

"I think the main impact is that they are going to have to limit the use of AI in decision-making systems based on personal data, including behavioural or emotion profiling, and incorporate risk analysis before putting certain models into production, depending on their criticality".

What could change for users?

"Seen from the user's side, the use of their data for profiling through AI systems or algorithms aimed at success models that lack transparency and explainability should be limited. In fact, the political agreement on the AI Act coincides with a ruling by the EU Court of Justice declaring the German credit scoring system used by banks and credit institutions unlawful as a fully automated system in breach of the GDPR".

The European Union wants to position itself as a leader in guiding the development of AI in the world. Are these ambitions realistic, and how will this law help to fulfil them?

"Europe has been wanting to position itself as a moral reference for technology for years by putting the human being at the centre through the approval of several rules such as the GDPR, DSA or DMA, but it forgets the problems of regulating and sanctioning companies operating from outside the EU. It is true that the Regulation provides for a system of sanctions but, on a day-to-day basis, users are quite lost when it comes to personal and specific infringements.

We must ask ourselves whether the best way to protect democracy is to sanction companies that factor possible sanctions into their operational risk analysis and decide which ones are worth incurring, or to encourage the development of competitive tools in Europe that meet our regulatory standards. As long as this does not happen, we will be a colony of the US, which will end up sweeping away our regulation or integrating it, as I say, as just another cost.

Otherwise, the risks of foundational AIs, which are enormous in terms of their penetration and power, have not been addressed in this regulation".

AI is developing very fast, while legislative work is slower. Will this law be able to have a quick and lasting impact?

"No regulation works anymore without technical implementation and standardisation acts. It is in these details that the devil of any regulation is to be found and where the battle must be fought.

In any case, the regulation regulates realities that are already obsolete today or could be managed with already approved regulation. It is designed for business or state developments, but it misses out on generative consumer AI, which is, in fact, the great challenge ahead of us".

Claudio Novelli

Postdoctoral Research Fellow, Department of Legal Studies, University of Bologna and International Fellow, Digital Ethics Center, Yale University

Observing the negotiation on the AI Act proposal was like witnessing the construction of a bridge across a vast chasm of uncertainty and potential pitfalls. Compromise was key to reaching an agreement, yet some decisions were tougher than others. Moving away from self-regulation for AI models and opting for direct oversight was a necessary step. Banning most biometric systems was a prudent move. Law enforcement can still use them in specific situations, like preventing terrorism or sexual exploitation, but only under judicial review and independent scrutiny.

This is just the beginning, though. Many details still need to be worked out, like the AI Office's role and how it will work with national authorities. Figuring out how deployers will assess the impact of their AI systems on fundamental rights is another crucial step. The next 24 months will be critical. They will determine whether the EU has truly achieved its goal of leading the way in regulating AI, setting a global benchmark for responsible and innovative AI governance.

Lucía Ortiz de Zárate

Pre-doctoral researcher in Ethics and Governance of Artificial Intelligence in the Department of Political Science and International Relations at the Autonomous University of Madrid

Reaching an agreement on the regulation of Artificial Intelligence (AI) is good news for all Europeans and, hopefully, a wake-up call for other countries and powers to follow suit and regulate these emerging technologies. AI is a group of cross-cutting technologies that can be applied to a wide range of fields, from entertainment to border control, from personnel selection processes in companies to students at university. In other words, when we talk about AI we have to think of everything from chatbots that assist us when we need information to facial recognition systems used to identify people.

The European regulation aims to create a space in which the adoption of these technologies is compatible with fundamental values and rights, as AI can generate major problems related to data privacy, transparency, accountability or even discriminatory situations. We have examples from other countries in the world, such as China, where data are massively collected to reward or punish citizens based on their good or bad behaviour. The EU aims to prevent such uses of AI through a risk-based regulation that sets out very strict requirements for the use of AI applied to high-risk areas such as border control, recruitment processes, etc.

Some risks are considered unacceptable because they run contrary to fundamental values and rights, and the corresponding uses will therefore be banned in the EU. This is the case for social scoring systems such as China's, and for the use of AI to modify people's behaviour or to exploit people's vulnerabilities (economic situation, age, etc.). The use of real-time facial recognition systems, which is highly controversial, seems to be banned with some exceptions (terrorism, kidnapping, human trafficking, etc.).

Time will tell whether the law is effective. In principle, the regulation should be flexible enough to adapt to new technological advances, which are arriving faster and faster, and at the same time specific enough to prevent situations that could be very serious and to sanction when necessary. In any case, it is good news that the EU has opted to regulate AI and place some limits on technologies that, without control, could be terribly harmful, even without us being aware of it.